HR leaders are under pressure to make decisions that carry weight: who to redeploy, who to promote, where to invest in development, and how to close the skills gaps standing in the way of business execution. To address this, many organizations have turned to AI-driven skills platforms. These systems promise visibility into talent. What they often fail to deliver is insight leaders can act on with confidence.

The issue is that the data lacks the depth, clarity, and behavioral context required to make real decisions about people.

At the core of this failure is the skills ontology. Most platforms rely on frameworks generated by engineers using job board language and market taxonomies—systems that capture what skills are called, but not what they mean in practice. These ontologies don’t describe how skills evolve, how proficiency is demonstrated, or how capability connects to business value in different roles and environments.

Without that foundation, AI can surface patterns, but it cannot guide strategy.

This article breaks down why skills architecture needs more than speed or scale—and how Fuel50’s I/O psychologists build a smarter ontology that actually supports the people decisions HR leaders need to make.

Why AI alone isn’t enough to build a strong skills architecture

AI has changed the way skills data is processed. It can rapidly scan job descriptions, resumes, public labor market datasets, and learning content to identify patterns, tag capabilities, and generate connections between roles and competencies that would take humans weeks to map manually. The scale and speed of these systems are undeniable.

Yet scale alone does not equal insight.

The fundamental challenge is that AI models—especially when left unguided—treat skills as static keywords or interchangeable tokens, rather than behavior-driven capabilities that evolve over time, vary by context, and depend on how people actually apply them in real work. AI can detect associations between skills and roles. It cannot determine whether those associations reflect meaningful, high-performance behaviors within a given organization.

Many vendors use AI to auto-generate role profiles by analyzing market data, public job boards, and resumes. This often results in role definitions that are technically complete but behaviorally hollow. For example, two job titles might share a long list of overlapping skills, but how those skills are expected to show up—and at what proficiency level—can differ dramatically between industries, functions, or even departments inside the same company. A senior business analyst in a healthcare company and one in a fintech startup may both need “data storytelling,” yet the expectations, tools, pace, and business impact of that skill are entirely different.

AI cannot resolve that gap without help.

It cannot judge which skills are relevant in one context and redundant in another. It cannot define what “proficient” looks like in behavioral terms. It cannot detect whether a skill is a critical differentiator or a baseline expectation. And it cannot, on its own, embed fairness, inclusivity, or organizational nuance into the way those skills are interpreted and applied.

That’s where I/O psychologists become essential.

When psychologists define skills, they do more than list them. They describe what those skills look like in action. They map how those capabilities develop over time. They tie proficiency to behavior, not assumptions. And they ensure the ontology is not just broad but also accurate, ethical, and strategically aligned to how the business actually works.

AI is a powerful tool for scaling analysis. However, a skills architecture that informs real workforce decisions requires more than automation. It requires intelligence that understands people.

“AI can scale insights, but only psychology can make them meaningful.”

What is I/O psychology, and why does it matter in skills tech?

Industrial and Organizational (I/O) psychology is a discipline focused on how people behave, perform, and grow at work. It is applied behavioral science—used to build systems that support better hiring, development, retention, and performance outcomes.

I/O psychologists study how people learn, how motivation works, how behaviors map to outcomes, and how potential is developed over time. In other words, they specialize in exactly the areas where most skills technology falls short.

This matters because defining and managing skills is not just an information problem—it is a behavioral problem. It is not enough to say that a role requires “strategic thinking” or “collaboration” or “problem solving.” Those labels mean nothing unless they are behaviorally defined, proficiency-leveled, and grounded in how that capability actually shows up inside a specific organization.

That’s what I/O psychology brings to skills architecture.

At Fuel50, I/O psychologists are not brought in after the fact to validate an already-built system. They are embedded at every level of the platform—designing the ontology, defining behavioral proficiency ladders, guiding how AI models interpret data, and shaping how roles and capabilities are structured for real-world decision-making.

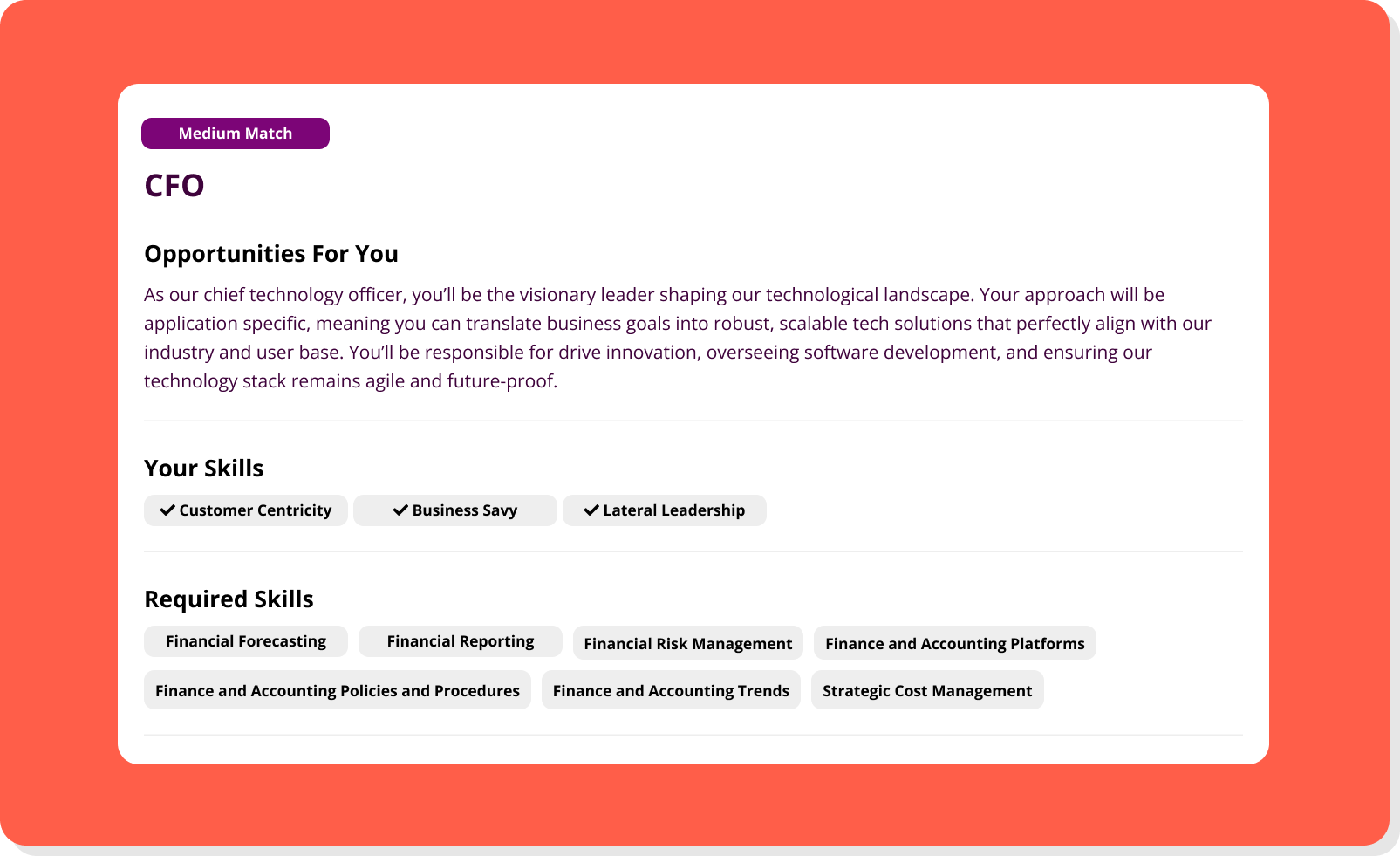

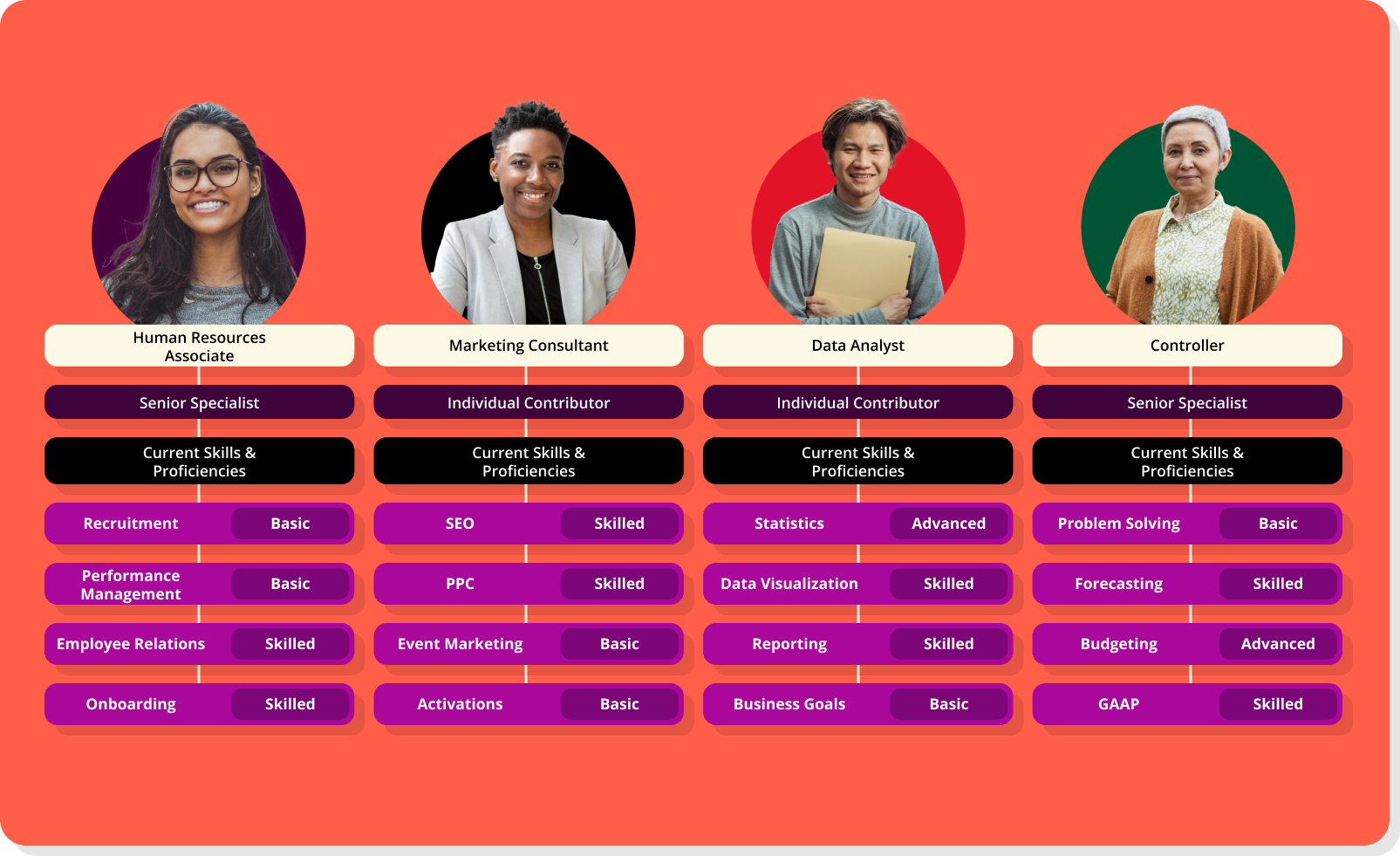

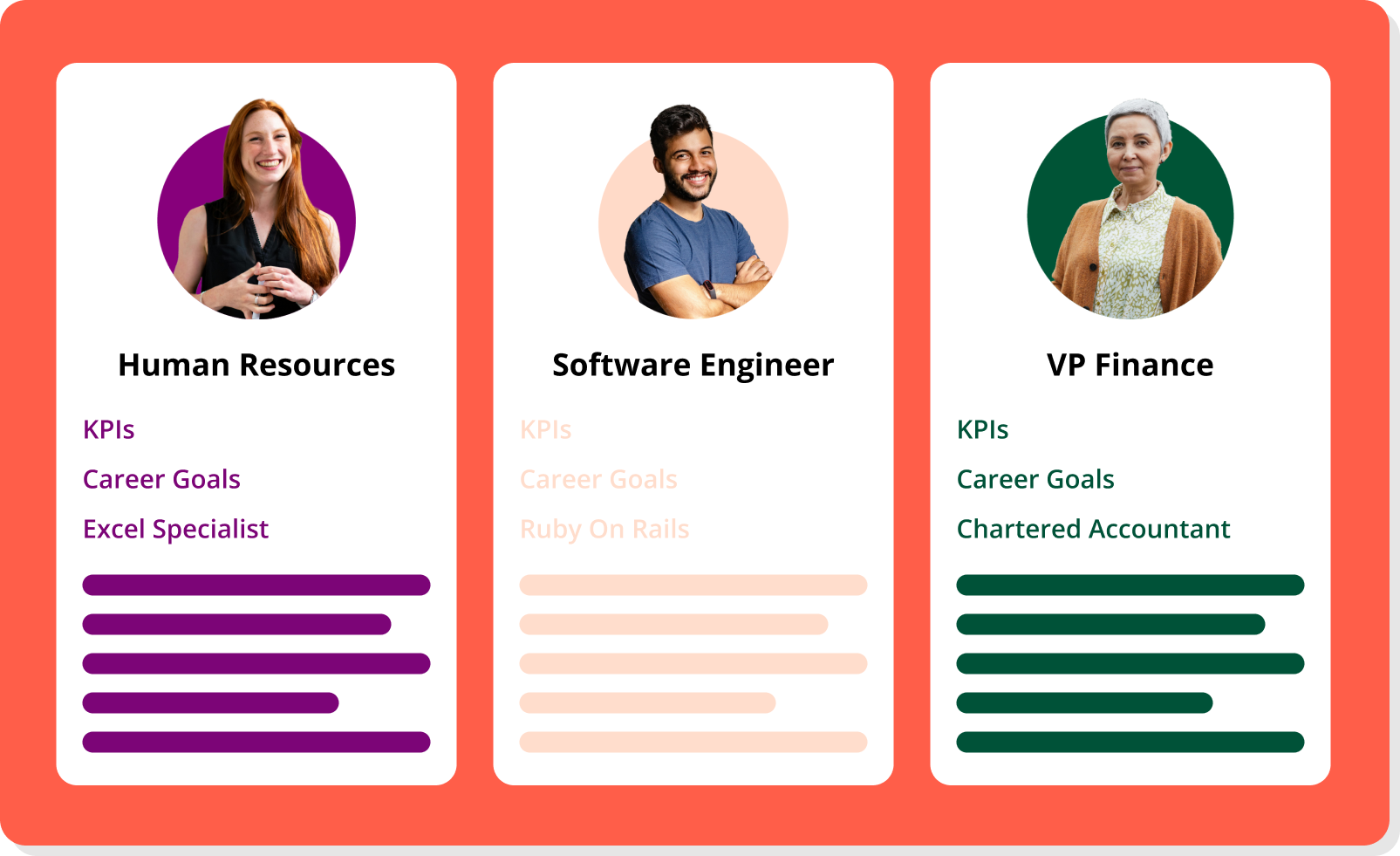

Their work ensures that the skills data flowing through the system is not just accurate—it is actionable. A manager does not just see that a team member has “communication skills.” They see how that skill has been demonstrated, at what level, and in what context. A leader does not just get a list of skills gaps. They see which gaps are mission-critical based on their strategy and workforce composition.

This level of behavioral specificity is what transforms a skills taxonomy from a static list into a real decision-making engine.

“If your skills ontology doesn’t understand how people actually perform and grow in real roles, it’s just a list.”

How Fuel50 uses I/O psychology to make its skills ontology more intelligent

The effectiveness of a skills ontology depends entirely on its ability to reflect how work actually happens. A list of capabilities pulled from job boards or market data may look complete on paper, but if it lacks behavioral nuance, organizational context, and accuracy in how skills develop over time, it becomes unhelpful when applied to real people in real roles.

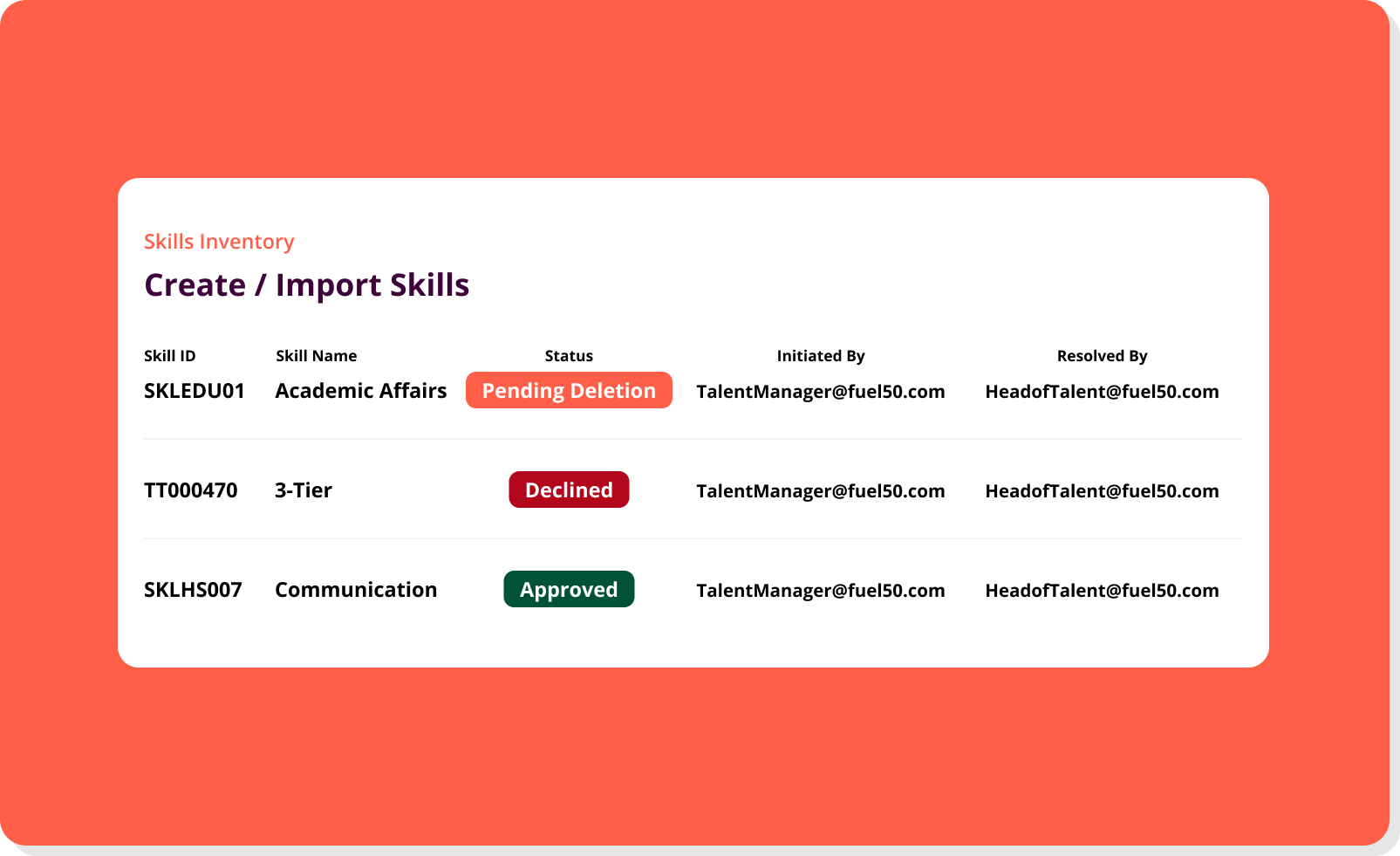

At Fuel50, we approach skills intelligence differently. Our ontology is not a static repository or an output of raw AI processing. It is a living system built by I/O psychologists, grounded in behavioral science, refined by real-world labor trends, and optimized through collaboration with engineers to support fairness, precision, and business relevance.

What follows is a breakdown of the specific ways our psychologists strengthen the ontology—and why it leads to smarter decisions for HR leaders.

Behaviorally informed skill definitions help HR teams move beyond labels

Most skills platforms start with market data and language patterns. Fuel50 starts with behavior. Every skill in our ontology is defined by I/O psychologists using their expertise in how people actually apply capabilities on the job. These are not vague labels. They are behaviorally anchored definitions, describing what the skill looks like in action, how it is applied in different settings, and what outcomes it supports.

This is critical for HR teams that need to assess more than whether someone has “strategic thinking” or “communication” on their profile. They need to understand what those skills mean in context: How do they show up in the employee’s day-to-day work? How do they contribute to performance? What differentiates a basic level of capability from mastery?

Behaviorally grounded skill definitions give HR teams clarity. They move skills from abstract concepts into practical, observable criteria that inform hiring, development, promotion, and mobility decisions. Instead of relying on keyword tagging or assumed skill matches, HR can see the real substance behind each capability.

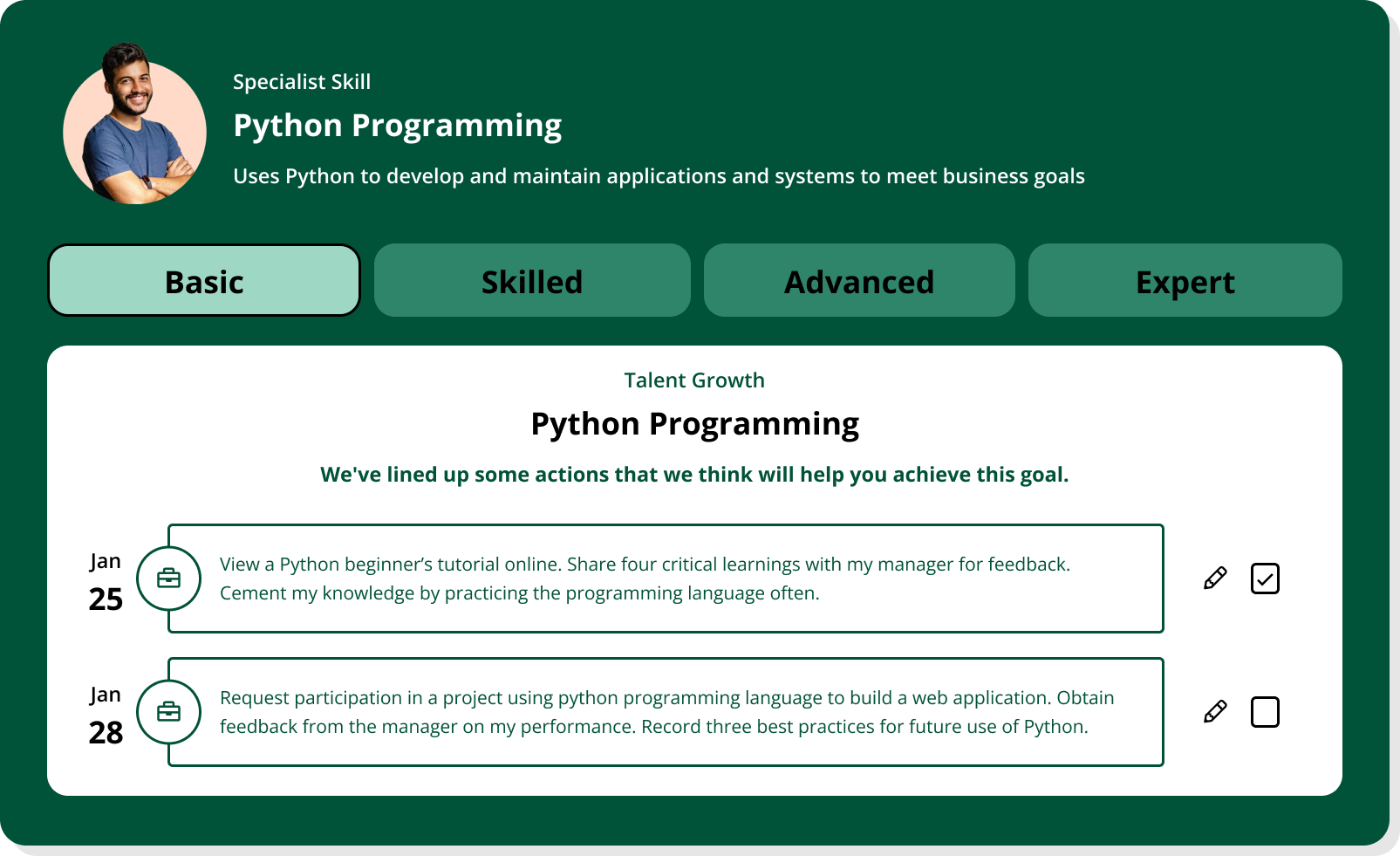

Proficiency levels are built to reflect how people actually grow

Too often, skill levels are treated as generic progressions: beginner, intermediate, advanced, expert. These levels may sound structured, but they lack behavioral specificity. What exactly changes between level two and level three? What does mastery look like in practice?

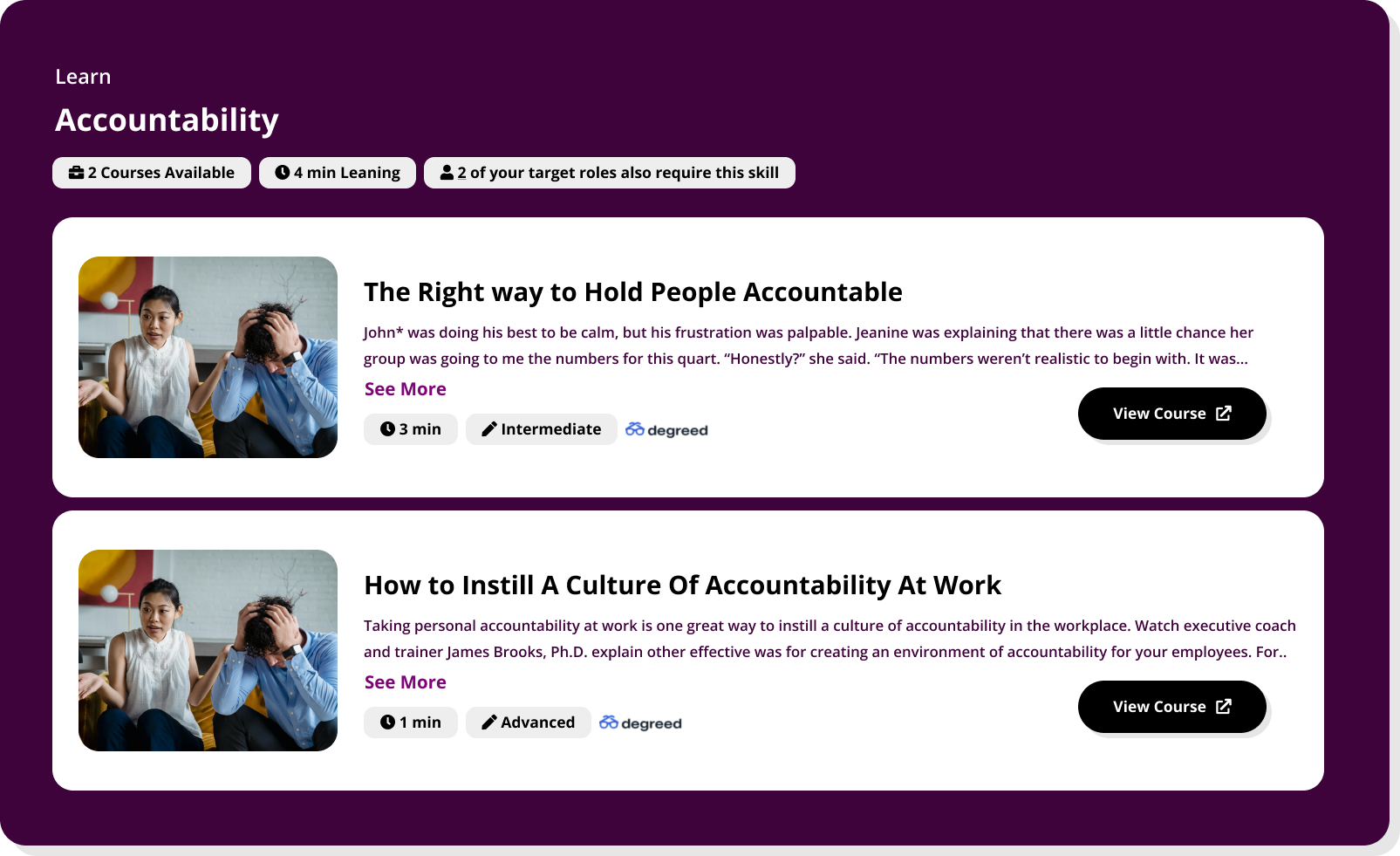

Fuel50’s ontology solves this by assigning every skill four clearly defined proficiency levels, each written by I/O psychologists to describe how the skill evolves across stages of development. These are not time-based assumptions or arbitrary tiers. They are behavioral indicators, showing how someone demonstrates a skill at each level, what tasks they’re capable of performing, and how their proficiency creates business value.

This helps organizations assess talent more accurately. It provides managers with concrete markers to guide performance conversations and coaching. It supports employees with clear developmental targets. And it gives HR the precision they need to align development efforts with organizational priorities—without guessing who’s ready for what role.
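
To make the idea of behaviorally defined levels concrete, here is a minimal Python sketch of how a skill with four proficiency levels might be represented. It is an illustration only, not Fuel50's actual data model: the class names, the `gap` helper, and the indicator wording are all hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class ProficiencyLevel:
    level: int      # 1 (lowest) to 4 (highest)
    indicator: str  # observable behavior at this level (placeholder wording)


@dataclass(frozen=True)
class Skill:
    name: str
    definition: str
    levels: Tuple[ProficiencyLevel, ...]

    def __post_init__(self) -> None:
        # The article describes four levels per skill, so enforce that here.
        if [lv.level for lv in self.levels] != [1, 2, 3, 4]:
            raise ValueError("a skill needs exactly four levels, numbered 1 to 4")

    def gap(self, current: int, target: int) -> List[ProficiencyLevel]:
        """Return the levels a person still has to demonstrate to reach target."""
        return [lv for lv in self.levels if current < lv.level <= target]


# Hypothetical example content, written only for this sketch.
data_storytelling = Skill(
    name="Data storytelling",
    definition="Turns analysis into a narrative that supports a decision.",
    levels=(
        ProficiencyLevel(1, "Summarizes findings from a prepared report."),
        ProficiencyLevel(2, "Builds a clear narrative from their own analysis."),
        ProficiencyLevel(3, "Tailors the narrative to different stakeholders."),
        ProficiencyLevel(4, "Coaches others and shapes decisions at senior level."),
    ),
)

# What separates level 2 from level 4 becomes an explicit list of behaviors.
for lv in data_storytelling.gap(current=2, target=4):
    print(lv.level, lv.indicator)
```

Storing an indicator per level, rather than a bare label such as "intermediate", is what lets a manager or employee see exactly which behaviors separate one level from the next.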

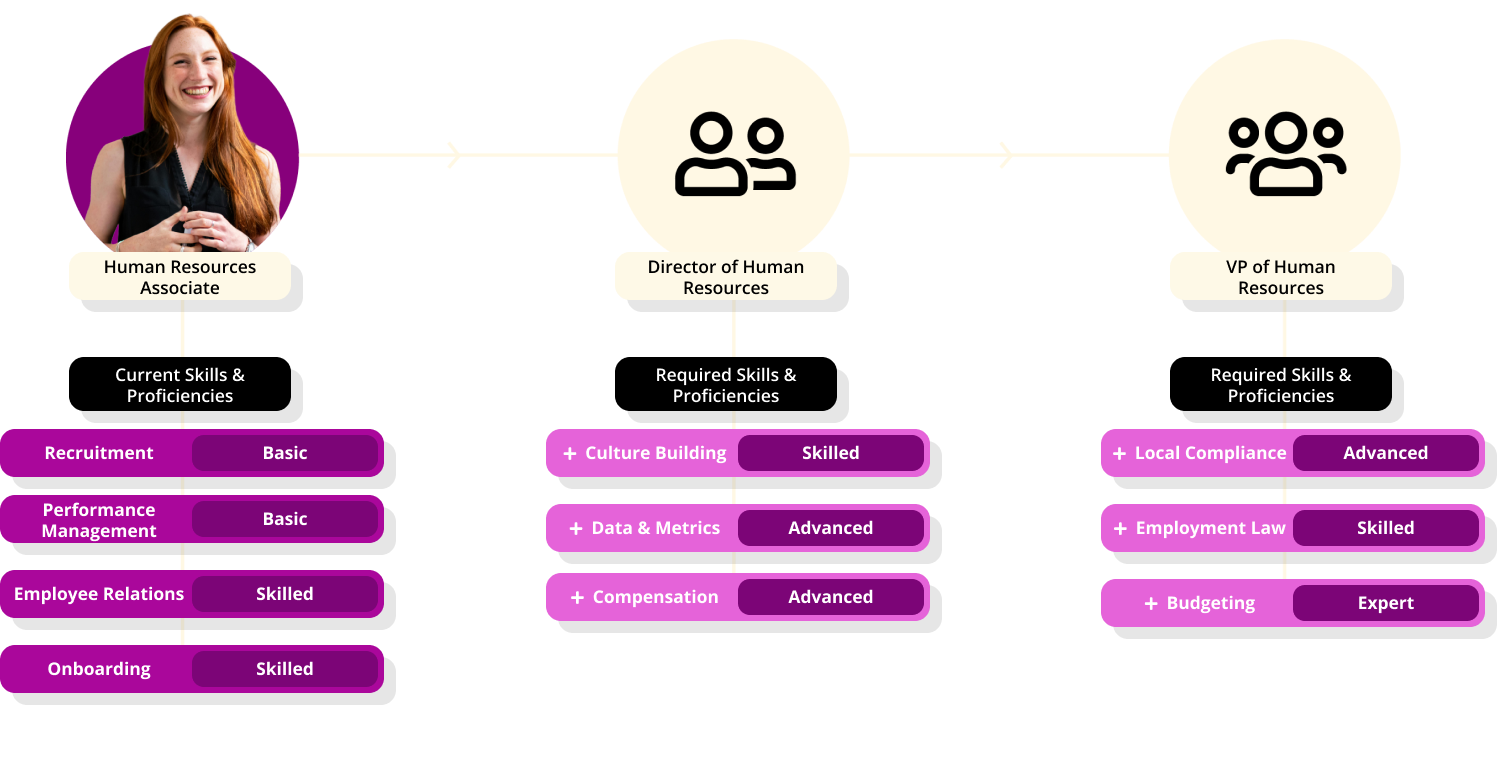

Contextual weighting ensures skills are interpreted in the right environment

One of the most overlooked issues in skills platforms is context blindness. A skill like “problem solving” or “leadership” does not mean the same thing across functions, geographies, or business models. Yet most platforms treat these skills as fixed attributes that apply equally everywhere.

Fuel50’s I/O psychologists account for that complexity. Through close collaboration with clients, they ensure that the weighting, relevance, and interpretation of each skill adapts to the organizational setting in which it appears. They consider factors like industry maturity, strategic objectives, company culture, and the role’s function within the business.

This allows the ontology to reflect how work is done, not just how work is titled. It lets companies distinguish between high-priority capabilities and generic requirements. It also supports better internal mobility by ensuring that skill matches are not just technically accurate but also functionally meaningful in the new environment.

For HR teams navigating reorgs, upskilling initiatives, or talent redeployment, this level of nuance is what prevents costly misalignments between workforce potential and business needs.
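
As a rough illustration of contextual weighting, the Python sketch below scores the same person against the same role requirements under two different sets of context weights. Everything here is an assumption made for the example: the `weighted_match` function, the scoring formula, the skill names, and the weights are hypothetical and do not describe how Fuel50 computes matches.

```python
from typing import Dict


def weighted_match(
    person_levels: Dict[str, int],
    role_requirements: Dict[str, int],
    context_weights: Dict[str, float],
) -> float:
    """Return a 0..1 score: the weighted share of required proficiency met.

    Skills missing from context_weights default to a weight of 1.0.
    """
    total = 0.0
    achieved = 0.0
    for skill, required in role_requirements.items():
        weight = context_weights.get(skill, 1.0)
        # Cap at the required level so surplus in one skill cannot
        # compensate for a shortfall in another.
        have = min(person_levels.get(skill, 0), required)
        total += weight * required
        achieved += weight * have
    return achieved / total if total else 0.0


# Hypothetical person and role, using levels 1 to 4.
person = {"Data storytelling": 3, "Regulatory knowledge": 1}
role = {"Data storytelling": 3, "Regulatory knowledge": 3}

# Same role requirements, two organizational contexts (assumed weights).
healthcare_weights = {"Regulatory knowledge": 3.0}
fintech_weights = {"Regulatory knowledge": 1.0}

healthcare_score = weighted_match(person, role, healthcare_weights)
fintech_score = weighted_match(person, role, fintech_weights)
print(round(healthcare_score, 2), round(fintech_score, 2))
```

With these assumed weights, the identical profile scores lower in the context that treats regulatory knowledge as a priority. In the approach the article describes, deciding what those weights should be is the psychologists' job, working with the client.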

Labor market intelligence is integrated continuously—not bolted on

Most skills frameworks degrade quickly. The half-life of a capability is shrinking, and the pace of market evolution makes it impossible to rely on static taxonomies. Fuel50’s ontology remains relevant because our I/O psychologists continuously refresh and evolve the framework using real-time market data.

This isn’t just a manual update or periodic refresh. It’s a structured process in which emerging skills, role changes, and industry-specific shifts are reviewed, validated, and integrated by psychologists who understand which changes matter and which are noise. Our team distinguishes between a trend and a true inflection point in how work is evolving.

The result is a living ontology that evolves as the world of work does. HR teams using Fuel50 don’t need to worry about outdated frameworks undermining their planning. They are supported by a system that reflects today’s realities while anticipating tomorrow’s needs.

AI becomes more fair, relevant, and strategic with psychologists in the loop

Fuel50’s approach to AI is grounded in human-machine teaming. Our engineers build models that scale, process, and surface patterns. Our I/O psychologists define what the models should look for, how outputs should be evaluated, and where bias or blind spots might emerge.

This collaboration ensures that our AI is not just fast—it is behaviorally accurate, ethically sound, and strategically aligned. Our psychologists work directly on the training, tuning, and validation of AI outputs. They test for fairness. They flag bias risks. They assess whether a skill inference makes sense in the role’s context. And they guide the model to learn in ways that respect individual and organizational diversity.

This is especially critical in high-stakes environments where skill recommendations influence hiring, promotion, and development opportunities. With psychologists in the loop, HR leaders can trust that the intelligence they’re seeing has been reviewed, stress-tested, and grounded in science—not just generated at scale.